MLP-Mixer: MLP is all you need... again? ...

"MLP-Mixer: An all-MLP Architecture for Vision" - Research Paper Explained

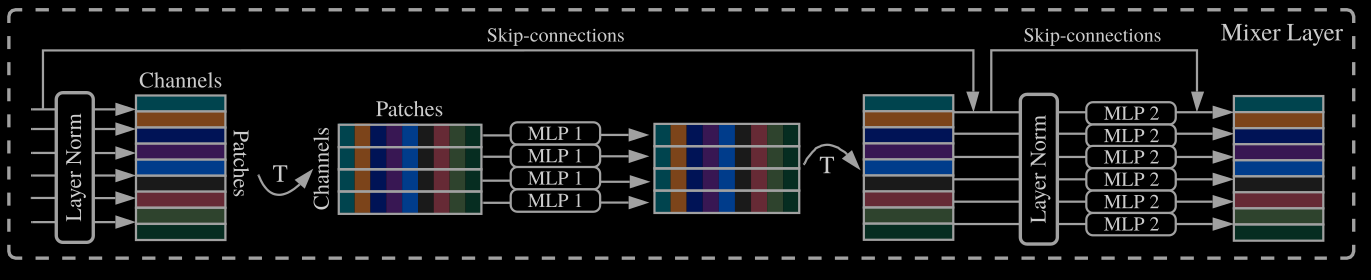

Let's try to answer the question: is a feed-forward MLP, built only from matrix multiplications and scalar non-linearities, enough to compete with modern architectures such as ViT or CNNs? No convolutions, no attention? It sounds like we have been here before. Does that mean researchers are lost and going around in circles? It turns out that what has changed along the way is the scale of compute resources and data, the same increase that helped ML, and especially DL, flourish over the past 5-7 years. We will discuss the paper, which shows that MLP-based models can replace CNNs and attention-based Transformers, reaching comparable scores on image classification benchmarks at pre-training and inference costs similar to SOTA models.
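To make "only matrix multiplications and scalar non-linearities" concrete, here is a minimal NumPy sketch of a single Mixer-style block. It is an illustration under stated assumptions, not the paper's reference implementation: the helper names (`gelu`, `mlp`, `layer_norm`, `init_mlp`, `mixer_block`), the toy sizes, and the random initialization are all hypothetical, and the layer normalization here has no learnable scale or shift.

```python
import numpy as np

def gelu(x):
    # Tanh approximation of GELU: a scalar non-linearity applied elementwise.
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def mlp(x, w1, b1, w2, b2):
    # Two matrix multiplications with a non-linearity in between.
    return gelu(x @ w1 + b1) @ w2 + b2

def layer_norm(x, eps=1e-6):
    # Normalize over the last axis (simplified: no learnable parameters).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def init_mlp(rng, dim, hidden):
    # Hypothetical initialization, chosen only to make the sketch runnable.
    return (rng.normal(0.0, 0.02, (dim, hidden)), np.zeros(hidden),
            rng.normal(0.0, 0.02, (hidden, dim)), np.zeros(dim))

def mixer_block(x, token_params, channel_params):
    # x has shape (num_patches, channels).
    # Token mixing: transpose so the MLP acts across patches, then add a skip connection.
    x = x + mlp(layer_norm(x).T, *token_params).T
    # Channel mixing: the MLP acts across channels, independently for each patch.
    x = x + mlp(layer_norm(x), *channel_params)
    return x

rng = np.random.default_rng(0)
num_patches, channels = 16, 32          # toy sizes, assumed for illustration
x = rng.normal(size=(num_patches, channels))
token_params = init_mlp(rng, num_patches, 64)
channel_params = init_mlp(rng, channels, 128)
out = mixer_block(x, token_params, channel_params)
print(out.shape)  # (16, 32)
```

Note that the block contains no convolution and no attention: information moves between patches only through the transposed MLP, and between channels only through the second MLP.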

Michał Chromiak's blog